Using artificial intelligence to measure the heart

Artificial intelligence can now help clinicians by automatically measuring the heart in ultrasound images. This can save time and may in the future enable inexperienced users to perform accurate measurements of the heart.

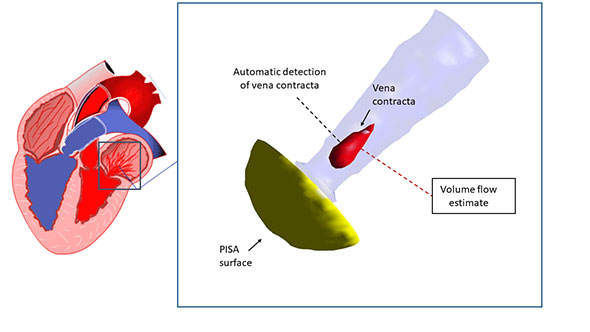

When performing ultrasound examinations of the heart, several measurements are done in order to determine how healthy the heart is. One of these measurements is left ventricular ejection fraction, which measures the pumping efficiency of the heart.

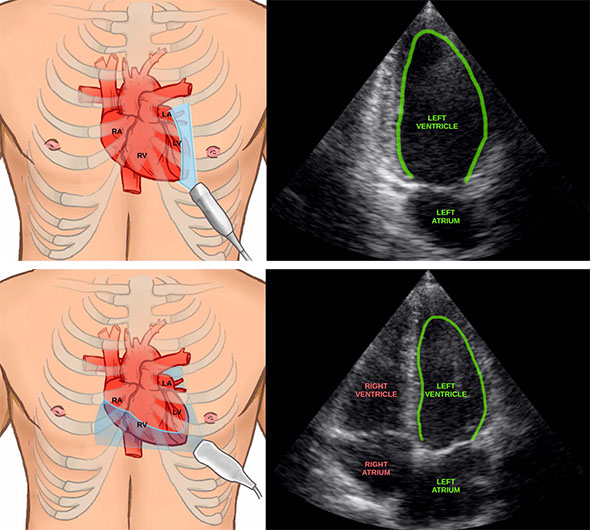

To calculate the ejection fraction, we need to calculate the volume of the heart chamber. To do this, clinicians have to acquire images of the heart from two perpendicular angles, also known as views, and then manually draw the heart chamber wall on the images. This can be time-consuming and is also subject to large interobserver variability, meaning that two different doctors may draw the heart chamber wall quite differently, resulting in different volume measurements.
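To make the calculation concrete, here is a minimal sketch in Python. It assumes the commonly used biplane method of disks, in which the chamber is sliced into a stack of elliptical disks whose two diameters come from the two perpendicular views. The article does not state which volume formula is used, so treat the method and the function names `biplane_volume` and `ejection_fraction` as illustrative assumptions. The ejection fraction itself is the share of the filled (end-diastolic) volume that is pumped out by the time the chamber is most contracted (end-systolic).

```python
import math


def biplane_volume(diameters_a, diameters_b, length):
    """Estimate the chamber volume with the biplane method of disks.

    diameters_a, diameters_b: chamber diameters measured at the same
    heights in two perpendicular views (same unit as length, e.g. cm).
    length: long-axis length of the chamber.
    """
    if len(diameters_a) != len(diameters_b) or not diameters_a:
        raise ValueError("need the same non-zero number of diameters per view")
    disk_height = length / len(diameters_a)
    # Each disk is treated as an ellipse with the two diameters as its axes.
    return sum(
        math.pi / 4 * a * b * disk_height
        for a, b in zip(diameters_a, diameters_b)
    )


def ejection_fraction(end_diastolic_volume, end_systolic_volume):
    """Percentage of the filled volume that is pumped out in one heartbeat."""
    if end_diastolic_volume <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (end_diastolic_volume - end_systolic_volume) / end_diastolic_volume
```

Because the volume depends on every diameter along the drawn wall, small differences in where two doctors draw the contour carry straight through to the ejection fraction.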

An inexperienced user may not even know where to draw the heart wall. An automatic tool may in the future enable these users to perform the necessary measurements. At the Centre for Innovative Ultrasound Solutions (CIUS), we are working on completely automating ultrasound heart measurements using deep learning.

Learning by example

Deep learning is a type of machine learning, a family of algorithms that learn to solve a task by looking at a large number of examples. Most machine learning methods today use what are called deep neural networks, which are inspired by the way human brains work. For instance, to create a neural network which can detect whether an image contains a dog or not, you collect a large set of images with and without dogs. You then train the neural network with these images, and for every image the system has to say whether the image contains a dog or not. If the result is incorrect, the neural network has to adjust itself so that it is less likely to make the same mistake again. By doing this enough times and with enough variation in the input images, the system will learn what a dog looks like automatically!
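The guess, check and adjust loop described above can be sketched in a few lines of Python. This is a deliberately tiny stand-in, not the networks we use: a single artificial neuron instead of a deep network, and two made-up numbers per example instead of image pixels. The names `predict` and `train` and the toy data are assumptions chosen for illustration.

```python
import math
import random


def predict(weights, bias, features):
    """Return a score between 0 and 1: how strongly the neuron believes 'dog'."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=200, learning_rate=0.5, seed=0):
    """Learn weights from (features, label) pairs by repeated small corrections."""
    rng = random.Random(seed)
    data = list(examples)  # copy so the caller's list is not reordered
    weights = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]
    bias = 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for features, label in data:
            # Positive error means the guess was too high, negative too low.
            error = predict(weights, bias, features) - label
            # Nudge every parameter in the direction that shrinks the error.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical toy data: two invented features per "image", label 1 means dog.
toy_examples = [
    ([1.0, 1.0], 1), ([0.9, 0.8], 1), ([0.8, 0.9], 1),
    ([0.1, 0.2], 0), ([0.0, 0.1], 0), ([0.2, 0.0], 0),
]
weights, bias = train(toy_examples)
```

After training, `predict(weights, bias, [0.9, 0.9])` gives a score above 0.5 and `predict(weights, bias, [0.1, 0.1])` a score below it. A deep neural network follows the same loop, only with millions of parameters and real images as input.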

In order to automate the ejection fraction measurements, the neural networks have to learn several tasks such as:

- View classification: Learns to identify which angle, or view, of the heart each image shows.

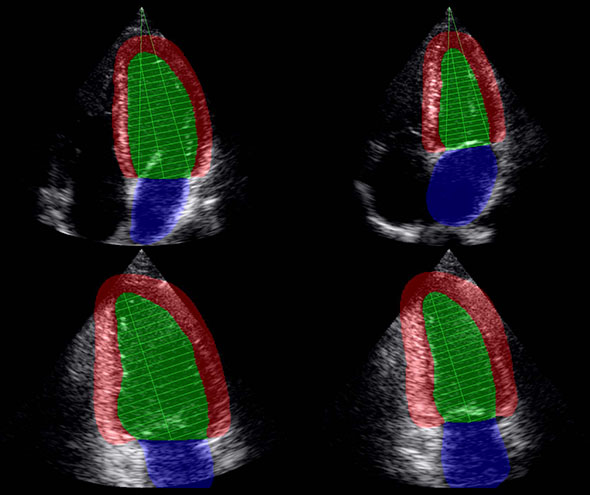

- Left ventricle segmentation: Learns to draw the heart chamber wall.
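Once the chamber has been drawn, the drawing can be turned into numbers. The sketch below assumes the segmentation is available as a binary mask (a grid where 1 marks a chamber pixel) and that the long axis of the chamber runs roughly along the image rows from top to bottom. Both are simplifying assumptions for illustration, and `chamber_widths` and `chamber_area` are hypothetical helper names.

```python
def chamber_widths(mask, pixel_size_cm):
    """Width of the chamber, in cm, on each image row that contains chamber pixels."""
    widths = []
    for row in mask:
        columns = [i for i, value in enumerate(row) if value]
        if columns:
            # Distance from the leftmost to the rightmost chamber pixel.
            widths.append((columns[-1] - columns[0] + 1) * pixel_size_cm)
    return widths


def chamber_area(mask, pixel_size_cm):
    """Area of the segmented chamber in square cm."""
    pixel_count = sum(1 for row in mask for value in row if value)
    return pixel_count * pixel_size_cm ** 2


# Tiny hypothetical mask: 1 marks a pixel inside the chamber.
example_mask = [
    [0, 1, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
widths = chamber_widths(example_mask, pixel_size_cm=0.5)
area = chamber_area(example_mask, pixel_size_cm=0.5)
```

Widths like these, measured in each of the two views, are the kind of input a volume calculation needs.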

To learn these tasks, ultrasound images from several hundred patients were collected in cooperation with CREATIS in France and manually annotated by human experts. The neural networks are quite big, having several million parameters which have to be adjusted during training.
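To give a feeling for where millions of parameters come from, here is a small counting sketch. It uses the standard parameter count of a convolutional layer, a building block common in image networks. The layer sizes are invented for illustration and do not describe our actual networks.

```python
def conv_parameters(kernel_size, in_channels, out_channels):
    """Number of adjustable values in one 2D convolutional layer."""
    # One weight per kernel position and input channel, plus one bias,
    # repeated for every output channel.
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


# Hypothetical stack of five 3x3 layers; channel counts are illustrative only.
channels = [1, 32, 64, 128, 256, 512]
total_parameters = sum(
    conv_parameters(3, c_in, c_out)
    for c_in, c_out in zip(channels, channels[1:])
)
print(f"{total_parameters:,} parameters")
```

Even this short stack has more than 1.5 million parameters, and most of them sit in the last, widest layer.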

Does it work in real life?

The artificial intelligence methods were implemented in a high-performance computing framework called FAST. This enables us to stream ultrasound data directly from a scanner and calculate the measurements in real time while the operator is scanning, as shown in this video:

Together with clinician Ivar Mjåland Salte at the Sørlandet Hospital in Kristiansand, we performed a study to see how well our methods can do these measurements automatically compared to manually drawing the heart wall contour. Ultrasound recordings of 72 patients were collected and manually annotated. The neural network was able to automatically and quite accurately calculate ejection fraction for all patients, even for patients whose ultrasound images had low image quality.

Ejection fraction is only one of many clinical measurements of the heart. We are currently working on extending the neural networks to learn several other measurements, such as MAPSE, LV mass and strain.

An inexperienced user may not know where to place the ultrasound probe or how to angle it correctly, both of which are necessary to get valid measurements of the heart. At CIUS, we are also developing an artificial intelligence assistant which can guide inexperienced users to acquire correct ultrasound images of the heart.

Erik Smistad
